How to Build an In-Memory Cache in Go Using Generics With TTL

There is a famous saying in computer science:

"There are only two hard things in Computer Science: cache invalidation and naming things." — Phil Karlton

Cache invalidation is hard because it forces a trade-off between performance (caching aggressively) and accuracy (ensuring users see the latest data immediately).

If you cache too much, users see old data. If you invalidate too often, your databases get hammered with traffic.

Done well, though, caching pays off. Whether you're building a web application or a microservice, reducing API latency is key to a good user experience.

Why In-Memory Caching?

In-memory caching addresses this by keeping frequently accessed data in RAM, so repeated reads skip the slow database query. An in-process map lookup takes well under a microsecond, while a database round trip typically takes milliseconds.

Go is particularly well-suited for this task. Its lightweight concurrency model (goroutines and sync primitives) makes it straightforward to build thread-safe caches that many goroutines can read and write at the same time.

Note: There are some popular packages like go-cache that can be used directly.

Basic In-Memory Cache

Now, let’s explore how to implement a basic in-memory cache in Go.

Since generics were introduced in Go 1.18, you might prefer a generic implementation. However, for simplicity, we'll start with map[string]interface{}.

For a basic cache, we need 4 main operations:

- Set(key, value): Adds or updates a value for a given key. It uses a write lock (Lock) to ensure thread safety during the update.

```go
func (c *Cache) Set(key string, value interface{}) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = value
}
```

- Get(key): Retrieves the value associated with a key. It uses a read lock (RLock), allowing multiple concurrent readers.

```go
func (c *Cache) Get(key string) (interface{}, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	value, ok := c.data[key]
	return value, ok
}
```

- Delete(key): Removes a specific key-value pair from the cache using a write lock.

```go
func (c *Cache) Delete(key string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	delete(c.data, key)
}
```

- Clear(): Resets the cache by replacing the map with a new one using a write lock.

```go
func (c *Cache) Clear() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data = make(map[string]interface{})
}
```

We use map[string]interface{} to store values of any type against string keys. While flexible, this requires runtime type assertions and isn't type-safe.
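The following sketch shows what that runtime type assertion looks like in practice. It repeats the basic Cache type from this section so that the snippet compiles on its own. The helper getString is a hypothetical name introduced only for illustration. It uses the comma-ok form of the assertion, so a value of the wrong type is reported instead of causing a panic.

```go
package main

import (
	"fmt"
	"sync"
)

// Cache is the basic map[string]interface{} cache from this section.
type Cache struct {
	data map[string]interface{}
	mu   sync.RWMutex
}

func NewCache() *Cache {
	return &Cache{data: make(map[string]interface{})}
}

func (c *Cache) Set(key string, value interface{}) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = value
}

func (c *Cache) Get(key string) (interface{}, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	value, ok := c.data[key]
	return value, ok
}

// getString (hypothetical helper) fetches a key and asserts that the value is a string.
func getString(c *Cache, key string) (string, bool) {
	val, ok := c.Get(key)
	if !ok {
		return "", false
	}
	s, ok := val.(string) // runtime check: ok is false if the value is not a string
	return s, ok
}

func main() {
	cache := NewCache()
	cache.Set("key1", "value1")
	cache.Set("key2", 123)

	if s, ok := getString(cache, "key1"); ok {
		fmt.Println("key1 is a string:", s)
	}
	if _, ok := getString(cache, "key2"); !ok {
		fmt.Println("key2 is not a string")
	}
	// Without the comma-ok form, this assertion would panic at runtime:
	// _ = cache.data["key2"].(string)
}
```

The compiler cannot catch a mismatch here. Storing an int and reading a string only fails when the program runs, which is the gap that the generic version later closes.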

The Structure

We define a Cache struct containing the map and a sync.RWMutex for thread safety.

```go
type Cache struct {
	data map[string]interface{}
	mu   sync.RWMutex
}
```

Implementation

```go
package main

import (
	"fmt"
	"sync"
)

// Cache represents an in-memory key-value store.
type Cache struct {
	data map[string]interface{}
	mu   sync.RWMutex
}

// NewCache creates and initializes a new Cache instance.
func NewCache() *Cache {
	return &Cache{
		data: make(map[string]interface{}),
	}
}

// Set adds or updates a key-value pair in the cache.
func (c *Cache) Set(key string, value interface{}) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = value
}

// Get retrieves the value associated with the given key from the cache.
func (c *Cache) Get(key string) (interface{}, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	value, ok := c.data[key]
	return value, ok
}

// Delete removes a key-value pair from the cache.
func (c *Cache) Delete(key string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	delete(c.data, key)
}

// Clear removes all key-value pairs from the cache.
func (c *Cache) Clear() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data = make(map[string]interface{})
}

func main() {
	cache := NewCache()

	// Adding data to the cache
	cache.Set("key1", "value1")
	cache.Set("key2", 123)

	// Retrieving data from the cache
	if val, ok := cache.Get("key1"); ok {
		fmt.Println("Value for key1:", val)
	}

	// Deleting data from the cache; the lookup below finds nothing
	cache.Delete("key2")
	if val, ok := cache.Get("key2"); ok {
		fmt.Println("Value for key2:", val)
	}

	// Clearing the cache (all operations are synchronous, so no sleep is needed)
	cache.Clear()
}
```

We get the following output:

```text
gk@jarvis:~/exp/code/cache/basic$ go run main.go
Value for key1: value1
```

Nothing is printed for key2 because it was deleted before the second lookup.

Key Findings

We have a working cache.

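We used a sync.RWMutex to guard the map against concurrent access. Go maps are not safe for concurrent writes, and without the lock the runtime can abort the program with "fatal error: concurrent map writes". If you are new to mutexes, I recommend exploring Mutex in Golang.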
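To see the lock doing its job, the sketch below hammers the basic cache from many goroutines at once. It repeats the Cache type from above so it compiles standalone. The Len helper, the hammer function, and the worker and key counts are arbitrary choices made for this illustration. Each goroutine writes distinct keys, so once sync.WaitGroup reports that all workers are done, every write should be present.

```go
package main

import (
	"fmt"
	"strconv"
	"sync"
)

type Cache struct {
	data map[string]interface{}
	mu   sync.RWMutex
}

func NewCache() *Cache {
	return &Cache{data: make(map[string]interface{})}
}

func (c *Cache) Set(key string, value interface{}) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = value
}

func (c *Cache) Get(key string) (interface{}, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	v, ok := c.data[key]
	return v, ok
}

// Len is a hypothetical helper (not part of the section's API) that counts entries.
func (c *Cache) Len() int {
	c.mu.RLock()
	defer c.mu.RUnlock()
	return len(c.data)
}

// hammer starts `workers` goroutines. Each writes `perWorker` distinct keys
// and reads each one back immediately. It returns the final entry count.
func hammer(workers, perWorker int) int {
	cache := NewCache()
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			for i := 0; i < perWorker; i++ {
				key := strconv.Itoa(id) + "-" + strconv.Itoa(i) // unique per worker
				cache.Set(key, i)
				cache.Get(key) // concurrent read under RLock
			}
		}(w)
	}
	wg.Wait() // wait for every goroutine before counting
	return cache.Len()
}

func main() {
	fmt.Println("entries:", hammer(50, 100)) // entries: 5000
}
```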

We understood the importance of caching and how it helps improve performance and efficiency.

However, the current cache has no notion of key expiry (TTL, time to live).

Items stay in the cache forever unless manually deleted.

Let’s add expiry support to our cache, which means after a defined TTL, the item expires and is removed from the cache.

Adding TTL Support

Since each cache entry can have its own TTL, we wrap every value in a CacheItem struct that holds the value (as interface{}) and its expiry time.

How it Works

- Adding Data: When you add data, you must specify how long it should live.

  Expiry Time = Current Time + TTL

- Retrieving Data: This is the smartest part. In this lazy expiration approach, the cache does not run a background timer to delete old keys (which would consume CPU). Instead, it checks at the moment you ask for the data:
  - Lookup: It finds the item in the map.
  - Check: Is Current Time > Expiry Time?
  - If yes (expired): It deletes the item right away and reports that it wasn't found (false).
  - If no (valid): It returns the value.

Thread-Safe Cache with TTL

We just need to update the cache structure, set function, and get function.

- The Structure: We define CacheItem to hold the value and its expiration time. The Cache struct now uses a map of CacheItem.

```go
type CacheItem struct {
	value  interface{}
	expiry time.Time
}

type Cache struct {
	data map[string]CacheItem
	mu   sync.RWMutex
}
```

- Set(..., ttl): Sets the value with an expiration time calculated as time.Now().Add(ttl).

```go
func (c *Cache) Set(key string, value interface{}, ttl time.Duration) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = CacheItem{
		value:  value,
		expiry: time.Now().Add(ttl),
	}
}
```

- Get(key): Checks whether the item exists and hasn't expired. If it has expired, Get deletes it (lazy expiration). Go's sync.RWMutex cannot upgrade a read lock to a write lock. Get therefore releases the read lock and acquires the write lock. It then re-checks the entry before deleting, because another goroutine may have changed it in between.

```go
func (c *Cache) Get(key string) (interface{}, bool) {
	c.mu.RLock()
	item, ok := c.data[key]
	if !ok {
		c.mu.RUnlock()
		return nil, false
	}
	if item.expiry.Before(time.Now()) {
		c.mu.RUnlock()
		c.mu.Lock()
		defer c.mu.Unlock()
		// Re-check: the key may have been deleted or refreshed meanwhile.
		if cur, ok := c.data[key]; ok && cur.expiry.Before(time.Now()) {
			delete(c.data, key) // Lazy deletion
		}
		return nil, false
	}
	c.mu.RUnlock()
	return item.value, true
}
```

Implementation

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// CacheItem represents an item stored in the cache with its expiration time.
type CacheItem struct {
	value  interface{}
	expiry time.Time // absolute time at which the key expires
}

// Cache represents an in-memory key-value store with expiry support.
type Cache struct {
	data map[string]CacheItem
	mu   sync.RWMutex
}

// NewCache creates and initializes a new Cache instance.
func NewCache() *Cache {
	return &Cache{
		data: make(map[string]CacheItem),
	}
}

// Set adds or updates a key-value pair in the cache with the given TTL.
func (c *Cache) Set(key string, value interface{}, ttl time.Duration) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = CacheItem{
		value:  value,
		expiry: time.Now().Add(ttl),
	}
}

// Get retrieves the value associated with the given key from the cache.
// It also checks for expiry and removes expired items.
func (c *Cache) Get(key string) (interface{}, bool) {
	c.mu.RLock()
	item, ok := c.data[key]
	if !ok {
		c.mu.RUnlock()
		return nil, false
	}

	if item.expiry.Before(time.Now()) {
		// RWMutex cannot upgrade a read lock: release it, then take the write lock.
		c.mu.RUnlock()
		c.mu.Lock()
		defer c.mu.Unlock()
		// Double-check: another goroutine may have deleted the key or
		// re-set it with a fresh TTL while no lock was held.
		if item2, ok := c.data[key]; ok && item2.expiry.Before(time.Now()) {
			delete(c.data, key)
		}
		return nil, false
	}

	c.mu.RUnlock()
	return item.value, true
}

// Delete removes a key-value pair from the cache.
func (c *Cache) Delete(key string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	delete(c.data, key)
}

// Clear removes all key-value pairs from the cache.
func (c *Cache) Clear() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data = make(map[string]CacheItem)
}

func main() {
	cache := NewCache()

	// Adding data: "name" lives for 2 seconds, "weight" for 5 seconds
	cache.Set("name", "mohit", 2*time.Second)
	cache.Set("weight", 75, 5*time.Second)

	// Retrieving data from the cache
	if val, ok := cache.Get("name"); ok {
		fmt.Println("Value for name:", val)
	}

	// Wait 3 seconds: "name" has expired, "weight" has not
	time.Sleep(3 * time.Second)

	// Retrieving expired data from the cache
	if _, ok := cache.Get("name"); !ok {
		fmt.Println("Name key has expired")
	}

	// Retrieving data before expiry
	if val, ok := cache.Get("weight"); ok {
		fmt.Println("Value for weight before expiry:", val)
	}

	// Wait another 3 seconds (6 in total): "weight" has expired too
	time.Sleep(3 * time.Second)

	if _, ok := cache.Get("weight"); !ok {
		fmt.Println("Weight key has expired")
	}

	// Deleting data from the cache
	cache.Set("key", "val", 2*time.Second)
	cache.Delete("key")

	// Clearing the cache (operations are synchronous, so no sleep is needed)
	cache.Clear()
}
```

With this expiry support, our cache implementation becomes more versatile and suitable for a wider range of caching scenarios.

Note: This is a basic implementation. Real-world applications often use sync.Map, eviction policies such as LRU, or libraries like go-cache or ttlcache.

When you run the code:

```text
gk@jarvis:~/exp/code/cache$ go run main.go
Value for name: mohit
Name key has expired
Value for weight before expiry: 75
Weight key has expired
```

This confirms that the cache stores values and expires them according to their TTL.
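The note earlier in this section mentions eviction policies such as LRU. Those policies are not part of this article's cache. As a rough illustration only, here is a minimal LRU sketch built on the standard library's container/list. The type LRU and its methods are hypothetical names. A doubly linked list keeps entries ordered by recency, and a map points into that list for constant-time lookup. The sketch is not safe for concurrent use on its own.

```go
package main

import (
	"container/list"
	"fmt"
)

// entry is what each list element stores.
type entry struct {
	key   string
	value int
}

// LRU is a hypothetical fixed-capacity cache that evicts the least recently used key.
// Not safe for concurrent use; guard it with a sync.Mutex like the caches above.
type LRU struct {
	capacity int
	order    *list.List               // front = most recently used
	items    map[string]*list.Element // key -> its element in order
}

// NewLRU creates an LRU cache. capacity must be at least 1.
func NewLRU(capacity int) *LRU {
	return &LRU{capacity: capacity, order: list.New(), items: make(map[string]*list.Element)}
}

// Get returns the value and marks the key as most recently used.
func (l *LRU) Get(key string) (int, bool) {
	el, ok := l.items[key]
	if !ok {
		return 0, false
	}
	l.order.MoveToFront(el)
	return el.Value.(*entry).value, true
}

// Set inserts or updates a key, evicting the least recently used key when full.
func (l *LRU) Set(key string, value int) {
	if el, ok := l.items[key]; ok {
		el.Value.(*entry).value = value
		l.order.MoveToFront(el)
		return
	}
	if l.order.Len() >= l.capacity {
		oldest := l.order.Back()
		l.order.Remove(oldest)
		delete(l.items, oldest.Value.(*entry).key)
	}
	l.items[key] = l.order.PushFront(&entry{key: key, value: value})
}

func main() {
	cache := NewLRU(2)
	cache.Set("a", 1)
	cache.Set("b", 2)
	cache.Get("a")    // "a" becomes the most recently used
	cache.Set("c", 3) // capacity reached: evicts "b"

	_, okB := cache.Get("b")
	valA, okA := cache.Get("a")
	fmt.Println(okB, valA, okA) // false 1 true
}
```

LRU bounds memory by entry count, whereas TTL bounds it by age. Libraries often combine the two.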

Key Findings

We successfully implemented TTL support using a lazy expiration strategy.

This approach is efficient as it avoids background CPU usage when the cache is idle.

However, there is a major drawback: Memory Leak.

Keys that expire but are never accessed again will remain in the map forever.

To resolve this, we need a background cleanup process.
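The leak is easy to observe. The sketch below is a trimmed copy of the lazy-expiration cache above, plus a hypothetical Len helper. It stores 1,000 keys with a 1 ms TTL and waits until they have all expired. The map still holds every one of them, because nothing ever calls Get on those keys.

```go
package main

import (
	"fmt"
	"strconv"
	"sync"
	"time"
)

type CacheItem struct {
	value  interface{}
	expiry time.Time
}

type Cache struct {
	data map[string]CacheItem
	mu   sync.RWMutex
}

func NewCache() *Cache {
	return &Cache{data: make(map[string]CacheItem)}
}

func (c *Cache) Set(key string, value interface{}, ttl time.Duration) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = CacheItem{value: value, expiry: time.Now().Add(ttl)}
}

// Len is a hypothetical helper that counts entries still held in the map,
// whether expired or not.
func (c *Cache) Len() int {
	c.mu.RLock()
	defer c.mu.RUnlock()
	return len(c.data)
}

// fillAndWait stores n short-lived keys, waits past their TTL, and reports
// how many entries still occupy memory.
func fillAndWait(n int) int {
	cache := NewCache()
	for i := 0; i < n; i++ {
		cache.Set("key"+strconv.Itoa(i), i, time.Millisecond)
	}
	time.Sleep(10 * time.Millisecond) // every key has expired by now
	return cache.Len()                // no Get ran, so lazy expiration removed nothing
}

func main() {
	fmt.Println("entries after expiry:", fillAndWait(1000)) // entries after expiry: 1000
}
```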

Using Go Generics

In this improved version, we will address the memory leak by introducing a background "janitor" process that periodically removes expired items.

Additionally, we'll leverage Go Generics (available since Go 1.18) to enforce type safety, eliminating the need for interface{} and runtime type assertions.
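As a quick illustration of the difference, here is a minimal sketch of the basic cache from the first section rewritten with type parameters (no TTL yet). The User type and its sample values are hypothetical. K must satisfy comparable because it is used as a map key, while the value type V can be anything.

```go
package main

import (
	"fmt"
	"sync"
)

// User is a hypothetical value type used only for this illustration.
type User struct {
	Name string
	Age  int
}

// Cache is a generic version of the basic cache.
type Cache[K comparable, V any] struct {
	data map[K]V
	mu   sync.RWMutex
}

func NewCache[K comparable, V any]() *Cache[K, V] {
	return &Cache[K, V]{data: make(map[K]V)}
}

func (c *Cache[K, V]) Set(key K, value V) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = value
}

// Get returns a V directly; a missing key yields V's zero value and false.
func (c *Cache[K, V]) Get(key K) (V, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	v, ok := c.data[key]
	return v, ok
}

func main() {
	users := NewCache[int, User]()
	users.Set(1, User{Name: "mohit", Age: 30})

	if u, ok := users.Get(1); ok {
		fmt.Println(u.Name, u.Age) // mohit 30 (no type assertion needed)
	}
	// users.Set(2, "not a user") // compile error: string is not a User
}
```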

Structure

- StartCleanup(interval): Launches a background goroutine that runs every interval to scan for and remove expired items.

```go
func (c *Cache[K, T]) StartCleanup(interval time.Duration) {
	go func() {
		ticker := time.NewTicker(interval)
		defer ticker.Stop()
		for {
			select {
			case <-ticker.C:
				c.cleanup()
			case <-c.stopChan:
				return
			}
		}
	}()
}
```

- StopCleanup(): Signals the background goroutine to stop, preventing goroutine leaks when the cache is no longer needed.

```go
func (c *Cache[K, T]) StopCleanup() {
	close(c.stopChan)
}
```

Implementation

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// Requires Go >= 1.18

// CacheItem represents an item stored in the cache with its expiration time.
type CacheItem[T any] struct {
	value  T
	expiry time.Time
}

// Cache represents a thread-safe in-memory key-value store with expiry support.
type Cache[K comparable, T any] struct {
	data     map[K]CacheItem[T]
	mu       sync.RWMutex
	stopChan chan struct{} // Channel to stop the cleanup goroutine
}

// NewCache creates and initializes a new Cache instance.
func NewCache[K comparable, T any]() *Cache[K, T] {
	return &Cache[K, T]{
		data:     make(map[K]CacheItem[T]),
		stopChan: make(chan struct{}),
	}
}

// Set adds or updates a key-value pair in the cache with the given TTL.
func (c *Cache[K, T]) Set(key K, value T, ttl time.Duration) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = CacheItem[T]{
		value:  value,
		expiry: time.Now().Add(ttl),
	}
}

// Get retrieves the value associated with the given key.
func (c *Cache[K, T]) Get(key K) (T, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()

	item, ok := c.data[key]
	if !ok {
		var zero T
		return zero, false
	}

	// Check for expiry
	if item.expiry.Before(time.Now()) {
		// We don't delete here: that would mean releasing the read lock and
		// taking the write lock on every expired read. The janitor removes it later.
		var zero T
		return zero, false
	}

	return item.value, true
}

// Delete removes a key-value pair from the cache.
func (c *Cache[K, T]) Delete(key K) {
	c.mu.Lock()
	defer c.mu.Unlock()
	delete(c.data, key)
}

// Len returns the number of items in the map, including expired ones not yet cleaned up.
func (c *Cache[K, T]) Len() int {
	c.mu.RLock()
	defer c.mu.RUnlock()
	return len(c.data)
}

// StartCleanup starts a background goroutine (janitor) that removes expired items every interval.
func (c *Cache[K, T]) StartCleanup(interval time.Duration) {
	go func() {
		ticker := time.NewTicker(interval)
		defer ticker.Stop()
		for {
			select {
			case <-ticker.C:
				c.cleanup()
			case <-c.stopChan:
				return
			}
		}
	}()
}

// StopCleanup stops the background cleanup goroutine.
// Call it only once: closing an already-closed channel panics.
func (c *Cache[K, T]) StopCleanup() {
	close(c.stopChan)
}

// cleanup scans the cache and removes expired items.
func (c *Cache[K, T]) cleanup() {
	c.mu.Lock()
	defer c.mu.Unlock()
	now := time.Now()
	for key, item := range c.data {
		if item.expiry.Before(now) {
			delete(c.data, key)
		}
	}
}

func main() {
	// Create a new cache for storing string keys and int values
	cache := NewCache[string, int]()

	// Start the background janitor to clean up every 1 second
	cache.StartCleanup(1 * time.Second)
	defer cache.StopCleanup() // Ensure cleanup stops when main exits

	// Adding data
	cache.Set("height", 175, 2*time.Second)
	cache.Set("weight", 75, 5*time.Second)
	fmt.Printf("Cache size: %d\n", cache.Len())

	// Retrieve data
	if val, ok := cache.Get("height"); ok {
		fmt.Println("Value for height:", val)
	}

	// Wait for expiration
	time.Sleep(3 * time.Second)

	// Check if expired
	if _, ok := cache.Get("height"); !ok {
		fmt.Println("height key has expired")
	}

	// Wait specifically for cleanup to happen
	time.Sleep(2 * time.Second)

	// At this point, the "height" key should have been removed from the map entirely by the janitor.
	fmt.Printf("Cache size post-cleanup: %d\n", cache.Len())
}
```

We get the following output:

```text
gk@jarvis:~/exp/code/cache/race-cache$ go run main.go
Cache size: 2
Value for height: 175
height key has expired
Cache size post-cleanup: 0
```

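The cache size is 0 after cleanup. Both keys are gone: height expired at the 2-second mark and weight at the 5-second mark. This final check runs almost exactly when weight expires, so the count depends on whether the janitor has ticked since then. Some runs may report a size of 1.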

Testing

To help identify race conditions, it is recommended to run your code with the race detector enabled:

```shell
go run -race main.go
```

Note: The race detector is a powerful tool, but it does not guarantee that all concurrency bugs are caught. It only reports races that actually occur during that particular run, so code paths the run never exercises go unchecked.

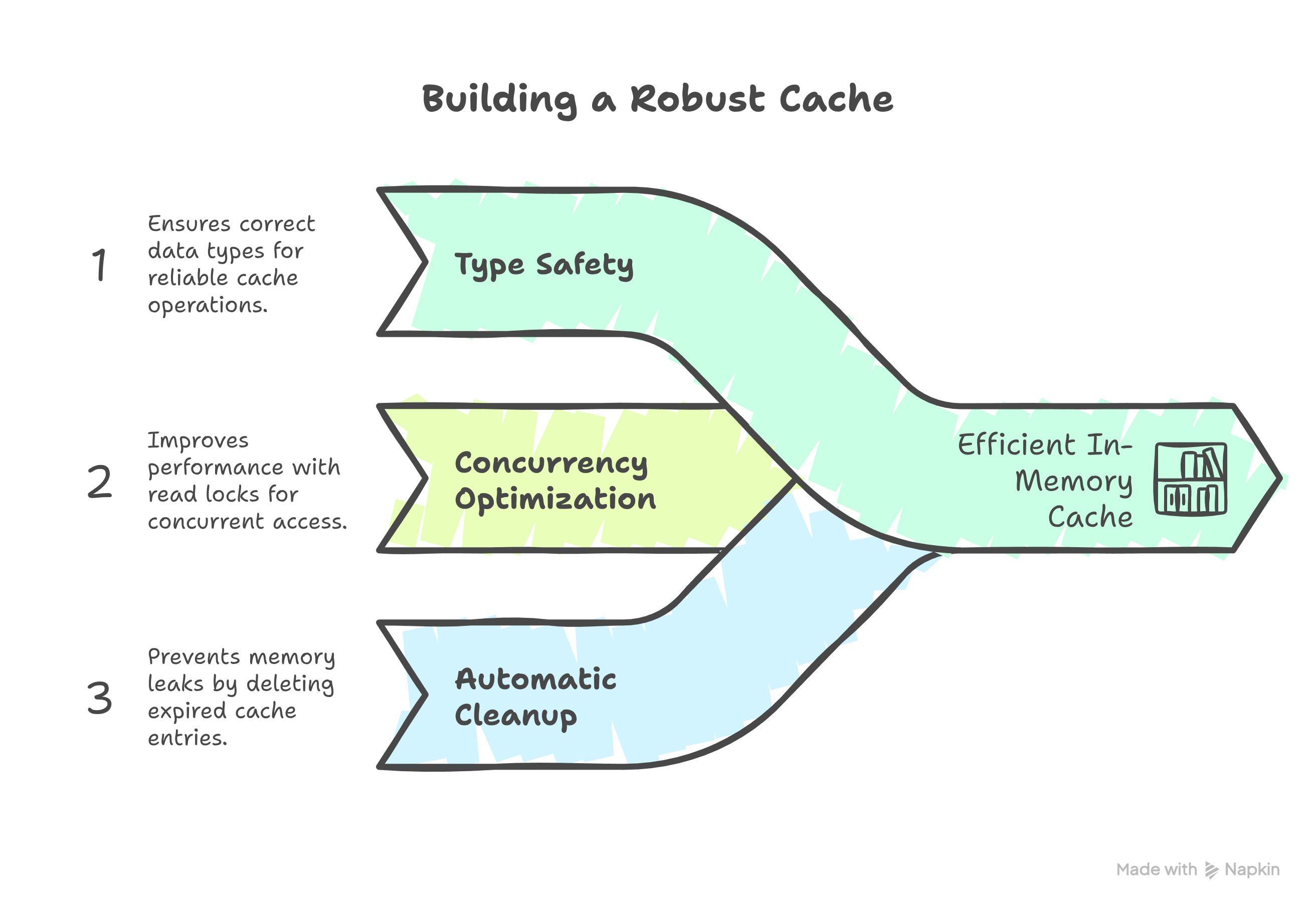

Key Improvements

- Type Safety: No more interface{}. The compiler ensures you only store and retrieve values of the declared type.
- Concurrency Optimization: Get() only ever takes the read lock (RLock). Expired entries are left for the janitor, so Get never needs the write lock and concurrent reads proceed in parallel.
- Automatic Cleanup: The StartCleanup method runs a background loop that deletes expired keys, preventing memory leaks from stale data.

Conclusion

We now have a generic, thread-safe in-memory cache that handles expiry.

By combining lazy expiration on reads with a periodic janitor, we strike a practical balance: fresh data when needed, reduced database load most of the time, and simple code.

Sources

I’ve been building FreeDevTools for developers.

It is a curated collection of cheatsheets, Manual Pages, MCPs, SVGs, PNGs, and various frequently used tools crafted to simplify workflows and reduce friction.

My goal is to save you time by providing the materials you need for your daily work in one place, so you don't have to hunt across multiple websites.

It’s online, open-source, and ready for anyone to use. Any feedback or contributions are welcome!

👉 Check it out: FreeDevTools

⭐ Star it on GitHub: freedevtools