Stop Paying for LLMs — Run Your Own Locally

If you’re tired of hitting daily limits, waiting on slow responses, running into rate limits, or paying monthly just to use an online model, there’s a better option. With Ollama, you can run powerful LLMs directly on your own machine.

The only catch: you need a decent amount of RAM and ideally a GPU. But once it’s set up, you get full control, no limits, and everything works offline.

In this article, we’ll walk through how to set up Ollama, the tool that lets you run models locally. We’ll look at its useful features, the commands you need to know, how to pick the right model for each task, and how to connect it with other tools so you can get your work done entirely on your machine.

System Requirements

Before installing Ollama, it’s good to check whether your machine has enough resources to run local models smoothly. The better the hardware, the faster and more responsive the models will feel.

Hardware Requirements

CPU

- Minimum: 64-bit processor with 2+ cores

- Recommended: 4+ cores for smoother performance

Memory (RAM)

- Minimum: 8GB RAM

- Recommended: 16GB+ RAM, especially for models above 7B

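Note: Larger models require more RAM. As a rough guide from the Ollama README, plan for at least 8GB of RAM for 7B models, 16GB for 13B models, and 32GB for 33B models.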

Storage

- Ollama installation: Around 4GB for the app and dependencies

- Model storage: Depends on the model size

  - Small models (1–7B): ~1–8GB each

  - Medium models (13–70B): ~8–80GB each

  - Large models (70B+): 80GB+
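The storage figures above follow from simple arithmetic: size is roughly the parameter count times the bits stored per weight. The sketch below is only an estimate under that assumption; the function name is hypothetical, it ignores file metadata, and the 4.5 bits per weight value is the figure for the Q4_0 format, used here as a stand-in for 4-bit formats in general.

```python
def estimate_model_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough on-disk size in GB: parameters x bits per weight / 8 bits per byte."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for label, params in [("7B", 7), ("13B", 13), ("70B", 70)]:
        fp16 = estimate_model_size_gb(params, 16)
        q4 = estimate_model_size_gb(params, 4.5)  # assumed 4-bit average
        print(f"{label}: ~{fp16:.0f} GB at 16-bit, ~{q4:.1f} GB at ~4.5 bits/weight")
```

This is why a 7B model that would need about 14 GB at 16-bit precision shows up as a download of roughly 4 GB.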

Setting up Ollama

Setting up Ollama is pretty straightforward. On Linux, you can install it by running the one-line installer:

curl -fsSL https://ollama.com/install.sh | sh

For Windows and macOS, you can download and install the official packages from the Ollama website.

Check out the download page for more details.

Once Ollama is running, it will automatically start a local server on port 11434. With that in place, the base setup is done.
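To confirm the server is actually listening, you can query its REST API. The sketch below assumes the default address from above and uses the `/api/tags` endpoint, which returns the locally installed models; the helper names are illustrative.

```python
import json
import urllib.error
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434"  # default address; change it if you moved the server


def model_names(tags_payload: dict) -> list:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in tags_payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 3.0) -> Optional[list]:
    """Return installed model names, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return model_names(json.load(resp))
    except (urllib.error.URLError, OSError):
        return None


if __name__ == "__main__":
    models = list_local_models()
    print("Ollama is not reachable" if models is None else f"Installed models: {models}")
```

An empty list means the server is up but no model has been pulled yet.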

Your next step is to pull a model that suits your task and start using it locally.

The Best Local Model for Your Task

You can download the model that fits your task using the command:

ollama pull <model-name>

Different models serve different purposes. Here’s a simple comparison of popular local models you can pull with Ollama.

1. Gemma — 2B / 7B / 9B

- Pull size:

  - 2B (gemma:2b) → ~1.5 GB

  - 7B (gemma:7b) → ~4.4 GB

  - 9B (gemma2:9b) → ~5.2 GB

- Best for: Quick answers, lightweight tasks, everyday writing

- Why: Small, fast, and works well even without a strong GPU.

2. Qwen — 1.8B → 72B

- Pull size:

  - 1.8B (qwen:1.8b) → ~1.1 GB

  - 7B (qwen:7b) → ~4.5 GB

  - 14B (qwen:14b) → ~8.8 GB

  - 32B (qwen:32b) → ~18 GB

  - 72B (qwen:72b) → ~41 GB

- Best for: Long-form writing, complex tasks, multilingual work

- Why: Strong at summarizing, reasoning, and long context.

3. Phi-3 — 3.8B / 14B

- Pull size:

  - Mini (3.8B) (phi3:mini) → ~2.2 GB

  - Medium (14B) (phi3:medium) → ~7.8 GB

- Best for: Laptops without GPUs, small projects, quick coding help

- Why: Lightweight and efficient, good for low-resource devices.

4. Mistral & Mixtral

- Pull size:

  - Mistral 7B (mistral:7b) → ~4.1 GB

  - Mixtral 8x7B (mixtral:8x7b) → ~26 GB

- Best for:

  - Mistral 7B: Structured output, reasoning, balanced performance

  - Mixtral 8x7B: High-end performance, complex reasoning, handling nuanced tasks (requires a powerful GPU)

- Why:

  - Mistral: Stable and well-rounded with a great quality–speed balance.

  - Mixtral: A "Mixture of Experts" (MoE) model that is significantly more powerful, but also much larger.

5. Llama 3 — 8B / 70B

- Pull size:

  - 8B (llama3:8b) → ~4.7 GB

  - 70B (llama3:70b) → ~39–40 GB

- Best for: Coding, debugging, deeper reasoning tasks

- Why: Strong all-around performance, especially in coding and problem-solving.

6. Codestral — 22B & Qwen Coder — 1.5B → 32B

- Pull size:

  - Codestral 22B (codestral:22b) → ~12–13 GB

  - Qwen Coder 1.5B (qwen2.5-coder:1.5b) → ~986 MB

  - Qwen Coder 7B (qwen2.5-coder:7b) → ~4.5 GB

  - Qwen Coder 32B (qwen2.5-coder:32b) → ~20 GB

- Best for: Code generation, refactoring, explaining code

- Why: Built specifically for software development workflows.
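A quick way to narrow the list is to filter by the memory you have free. The sketch below copies a few of the approximate pull sizes from this comparison; the function name and the 1.2x headroom factor (to leave room for context and runtime overhead) are assumptions, not Ollama rules.

```python
# Approximate pull sizes in GB, copied from the comparison in this article.
PULL_SIZES_GB = {
    "gemma:2b": 1.5,
    "phi3:mini": 2.2,
    "mistral:7b": 4.1,
    "qwen2.5-coder:7b": 4.5,
    "llama3:8b": 4.7,
    "qwen:14b": 8.8,
    "mixtral:8x7b": 26.0,
    "qwen:72b": 41.0,
}


def models_that_fit(free_memory_gb: float, headroom: float = 1.2) -> list:
    """Return models whose size x headroom fits in memory, largest first."""
    fitting = [
        (size, name)
        for name, size in PULL_SIZES_GB.items()
        if size * headroom <= free_memory_gb
    ]
    return [name for size, name in sorted(fitting, reverse=True)]


if __name__ == "__main__":
    print(models_that_fit(8))
```

With 8 GB free, this keeps the models up to the 7B to 8B class and drops everything from 14B upward.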

Customize Ollama for Your Use Case

Once you get the basics running, you can shape Ollama to work exactly the way you want.

You can create custom models, tune performance, adjust memory usage, or even change how Ollama runs in the background.

Here are the most useful ways to personalize your setup.

Build Your Own Custom Model

If you want a model that behaves in a specific way — for example, a coding assistant that always follows best practices — you can create one using a Modelfile.

This lets you:

- Choose a base model

- Adjust generation settings

- Add a system message

- Define a custom template

Here’s a simple example of a coding-focused custom Modelfile:

FROM codellama:7b

PARAMETER temperature 0.1
PARAMETER top_p 0.9
PARAMETER repeat_penalty 1.1

SYSTEM """
You are a senior engineer specializing in clean, efficient code.
Always include error handling and clear comments, and follow best practices.
Explain complex concepts in simple terms.
"""

# The template must match the base model's prompt format.
# This one uses the Llama 2 style [INST] format that Code Llama instruct models expect.
# Omit TEMPLATE entirely to inherit the base model's default.
TEMPLATE """[INST] <<SYS>>{{ .System }}<</SYS>>

{{ .Prompt }} [/INST]
"""

Once the Modelfile is saved (here as Modelfile.coding), build the model:

ollama create coding-expert -f Modelfile.coding

This gives you a model preconfigured for cleaner and more consistent code output. Note that this is configuration, not fine-tuning: the base model's weights are unchanged.
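Once created, the custom model can be called like any other model through the local REST API. The sketch below builds a non-streaming request to the `/api/chat` endpoint; the helper names are illustrative, and it assumes the server runs at the default address and that the model is named coding-expert as in the command above.

```python
import json
import urllib.request


def build_chat_request(model: str, user_message: str,
                       base_url: str = "http://localhost:11434") -> urllib.request.Request:
    """Build a non-streaming POST to Ollama's /api/chat endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, user_message: str, timeout: float = 120.0) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(model, user_message)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]
```

For example, `ask("coding-expert", "Write a function that reads a JSON file safely.")` returns the reply as a string, with the system message from the Modelfile applied automatically.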

Speed Up Models with Quantization

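Quantization stores model weights at lower precision (for example, 4 bits instead of 16). This reduces model size and memory use and usually speeds up inference, at the cost of a small drop in quality.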

It’s one of the easiest ways to boost performance.

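Check a model's quantization level (it is listed in the model details):

ollama show llama2

Create a quantized model. The FROM line in the Modelfile must point to an unquantized (FP16 or FP32) model:

ollama create llama2-q4 --quantize q4_K_M -f Modelfile.llama2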

Check model sizes:

ollama list | grep llama2
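To see why quantization trades a little accuracy for a lot of space, here is a toy illustration. It is not the scheme Ollama's model files actually use (those work on blocks of weights with per-block scales); it only shows the core idea of mapping floats to 4-bit integers with a shared scale.

```python
def quantize_4bit(weights: list) -> tuple:
    """Map floats to integers in [-8, 7] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs else 1.0
    quantized = [max(-8, min(7, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list, scale: float) -> list:
    """Recover approximate floats from the integers and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [1.75, -0.6, 0.3, 0.0]
    q, scale = quantize_4bit(original)
    print("4-bit values:", q)
    print("restored:    ", dequantize(q, scale))
```

Each weight now needs 4 bits instead of 16 or 32, and the restored values are close to, but not exactly, the originals. That small rounding error is the quality cost.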

Tune the Ollama Server to Your Needs

You can also configure the local Ollama server that runs in the background by setting environment variables when you start it:

# Enable detailed logging

OLLAMA_DEBUG=1 ollama serve

# Change host and port (0.0.0.0 listens on all network interfaces, so other machines can reach the server)

OLLAMA_HOST=0.0.0.0:11435 ollama serve

# Use a custom models directory

OLLAMA_MODELS=/custom/path/models ollama serve

# Pick a specific GPU

CUDA_VISIBLE_DEVICES=0 ollama serve
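If you move the server to a different host or port, any script that talks to it has to follow. One option is to read the same OLLAMA_HOST variable in your client code. The helper below is a hypothetical sketch that handles the common `host:port` form only (no IPv6 addresses or URL paths).

```python
import os


def ollama_base_url(env=None) -> str:
    """Turn an OLLAMA_HOST value into a URL a client on this machine can call."""
    env = os.environ if env is None else env
    host = env.get("OLLAMA_HOST", "127.0.0.1:11434")
    if "://" not in host:
        host = "http://" + host
    scheme, rest = host.split("://", 1)
    name, _, port = rest.partition(":")
    # 0.0.0.0 means "listen on every interface"; connect via loopback instead.
    if name in ("", "0.0.0.0"):
        name = "127.0.0.1"
    return f"{scheme}://{name}:{port or '11434'}"


if __name__ == "__main__":
    print(ollama_base_url())
```

With `OLLAMA_HOST=0.0.0.0:11435` set as in the example above, this resolves to `http://127.0.0.1:11435`.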

Hidden Settings for Power Users

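These environment variables are less well known but give you deeper control. The exact set depends on your Ollama version; ollama serve --help lists the ones your build supports.

# Allow more parallel requests per model

export OLLAMA_NUM_PARALLEL=4

# Change the default context length (in tokens)

export OLLAMA_CONTEXT_LENGTH=8192

# Enable flash attention

export OLLAMA_FLASH_ATTENTION=1

# Reserve part of each GPU's memory for other applications (in bytes)

export OLLAMA_GPU_OVERHEAD=1073741824

# Allow more time for a model to load before giving up

export OLLAMA_LOAD_TIMEOUT=10m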

These are great for heavy workloads or server-style setups.
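Some of these settings can also be chosen per request instead of server-wide. The sketch below builds a body for the `/api/generate` endpoint that sets the context window through `options.num_ctx` and controls how long the model stays loaded through `keep_alive`. The function name and default values are illustrative.

```python
import json


def generate_payload(model: str, prompt: str,
                     num_ctx: int = 8192, keep_alive: str = "10m") -> bytes:
    """Build a JSON body for POST /api/generate with per-request settings."""
    body = {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"num_ctx": num_ctx},  # context window for this request, in tokens
        "keep_alive": keep_alive,  # how long the model stays in memory afterwards
    }
    return json.dumps(body).encode("utf-8")


if __name__ == "__main__":
    print(generate_payload("llama3:8b", "Summarize this log file.").decode("utf-8"))
```

A larger `num_ctx` increases memory use, so it is worth raising only for the requests that need long inputs.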

Manage Memory and Performance

You can also control which models are loaded and how long they stay in memory.

# Check active models and memory usage

ollama ps

# Preload a model so the first response is faster

ollama run llama2 ""

# Unload a model to free its memory

ollama stop llama2

# Remove unused models from disk

ollama rm unused-model
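The information that ollama ps prints is also available from the REST API at `/api/ps`, which is handy for scripts. The sketch below summarizes such a response; it assumes each entry carries `name`, `size`, and `size_vram` fields (sizes in bytes), and the function name is illustrative.

```python
def summarize_loaded(ps_payload: dict) -> list:
    """Describe each loaded model and how much of it sits in GPU memory."""
    lines = []
    for m in ps_payload.get("models", []):
        total = m.get("size", 0)
        vram = m.get("size_vram", 0)
        gpu_pct = round(100 * vram / total) if total else 0
        lines.append(f"{m['name']}: {total / 1e9:.1f} GB loaded, {gpu_pct}% on GPU")
    return lines


if __name__ == "__main__":
    sample = {"models": [{"name": "llama3:8b", "size": 6_000_000_000, "size_vram": 3_000_000_000}]}
    for line in summarize_loaded(sample):
        print(line)
```

A model that is only partly on the GPU runs noticeably slower, so a low percentage here is a hint to try a smaller or more heavily quantized model.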

Get the Most Out of Your GPU

If you have an NVIDIA GPU, a few settings help you monitor and control how Ollama uses it:

# Monitor GPU usage

nvidia-smi -l 1

# Spread a model across all GPUs instead of packing it onto as few as possible

export OLLAMA_SCHED_SPREAD=1

# Restrict Ollama to specific GPUs

export CUDA_VISIBLE_DEVICES=0,1

# CUDA debugging (optional)

export CUDA_LAUNCH_BLOCKING=1
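For scripted monitoring, nvidia-smi can emit machine-readable output through its `--query-gpu` and `--format` options. The sketch below parses that output into per-GPU memory figures; the function names are illustrative, and `read_gpu_memory` only works on a machine with the NVIDIA driver installed.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text: str) -> list:
    """Parse 'index, used, total' rows (memory in MiB) into dictionaries."""
    rows = []
    for line in csv_text.strip().splitlines():
        index, used, total = (field.strip() for field in line.split(","))
        rows.append({"gpu": int(index), "used_mib": int(used), "total_mib": int(total)})
    return rows


def read_gpu_memory() -> list:
    """Run nvidia-smi once and return the parsed rows."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_memory(out)
```

Calling `read_gpu_memory()` before and after loading a model shows how much VRAM that model actually occupies.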

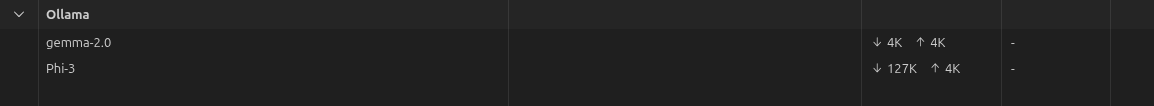

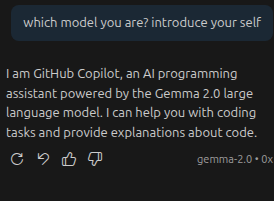

Using Local LLMs as a Coding Agent

One of the best ways to use local LLMs in VS Code as a coding agent is to integrate them with GitHub Copilot. GitHub Copilot supports local LLMs directly within its chat/agent window.

To enable this, follow these steps:

- First, make sure Ollama is running. If it's not, run the ollama serve command in your terminal.

- Then, in VS Code, go to the GitHub Copilot extension settings.

- Navigate to "Manage Models" and enable the Ollama models available on your system.

Once that's done, you can select a local model and interact with it freely in the chat window.

Get Everything Out of the Box

We discussed how to run Ollama and explored a few configurations you can tweak to get the best performance and speed up your daily tasks.

We introduced Ollama into our workflow to solve a bulk-processing LLM problem while working on freedevtool, a platform that provides over 125k free developer resources. To streamline the workflow, we are building a CLI tool called dprompts, which helps teams distribute large batches of LLM tasks across the local machines of developers and aggregate the results in a database. This approach removes the dependency on paid cloud LLMs and eliminates worries about cost, quotas, and rate limits.
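To make the idea of distributing a batch concrete, here is a minimal sketch of splitting prompts across machines round-robin. This is not how dprompts is implemented; the function and worker names are hypothetical and only illustrate the general approach.

```python
def split_batch(prompts: list, workers: list) -> dict:
    """Assign prompts to workers round-robin so each gets a near-equal share."""
    if not workers:
        raise ValueError("at least one worker is required")
    assignment = {w: [] for w in workers}
    for i, prompt in enumerate(prompts):
        assignment[workers[i % len(workers)]].append(prompt)
    return assignment


if __name__ == "__main__":
    jobs = [f"Describe resource #{n}" for n in range(1, 6)]
    for worker, share in split_batch(jobs, ["laptop-a", "laptop-b"]).items():
        print(worker, "->", share)
```

Each machine would then run its share against its own local Ollama server and write the results to a shared store.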

You can learn more about dprompts and how to set it up by visiting the project’s GitHub repository and checking the README.